Overview

This project implements a facial landmark detection system based on the Xception architecture, trained on the iBUG 300-W dataset. The goal is to accurately detect facial landmarks in images and to provide a user-friendly web application for real-time detection.

This model will support real-time inference for applications such as:

- Face recognition

- Emotion detection

- Virtual makeup

- Augmented reality (AR)

Learning Process

Dataset Used

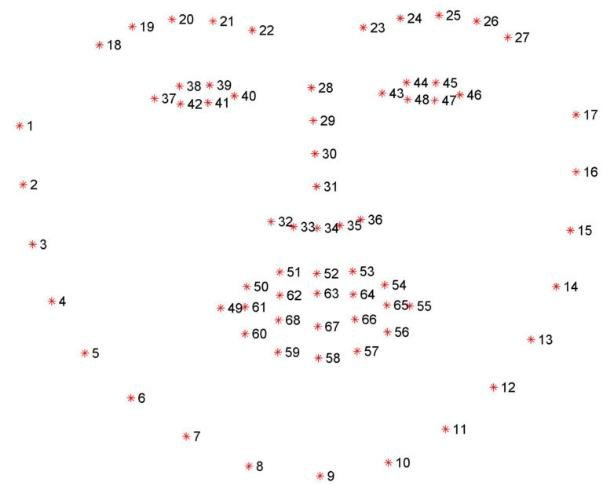

- Commonly Used Datasets:

- iBUG 300-W: Thousands of images labeled with 68 facial landmarks (eyes, nose, mouth, jawline).

- Applications: Face recognition, emotion analysis, augmented reality.

- Link to the dataset: here
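As a reference for the 68-point markup, the landmark indices fall into facial regions as shown below. Note that the scheme also covers the eyebrows. This is a minimal sketch: `LANDMARK_GROUPS` and `split_by_region` are hypothetical names, the 0-based index ranges follow the standard iBUG 68-point scheme, and the left/right naming follows the common dlib-style convention (the subject's own left and right), which is an assumption about how this project labels them.

```python
# 0-based index ranges (start inclusive, end exclusive) of the standard
# iBUG 300-W 68-point markup. Left/right is from the subject's point of view
# (hypothetical naming, following the common dlib-style convention).
LANDMARK_GROUPS = {
    "jaw": range(0, 17),
    "right_eyebrow": range(17, 22),
    "left_eyebrow": range(22, 27),
    "nose": range(27, 36),
    "right_eye": range(36, 42),
    "left_eye": range(42, 48),
    "mouth": range(48, 68),
}


def split_by_region(landmarks):
    """Group a sequence of 68 (x, y) points by facial region (hypothetical helper)."""
    if len(landmarks) != 68:
        raise ValueError(f"expected 68 landmarks, got {len(landmarks)}")
    return {name: [landmarks[i] for i in idx] for name, idx in LANDMARK_GROUPS.items()}
```

For example, `split_by_region(points)["mouth"]` returns the 20 mouth points, which is handy when visualizing predictions region by region.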

Procedure

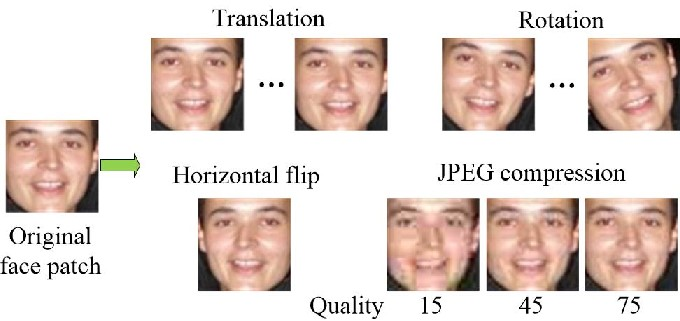

- Augmentation:

- Implemented a `FaceLandmarksAugmentation` class for tailored augmentation techniques:

- Cropping

- Random cropping

- Random rotation

- Color jittering

- Key parameters include image dimensions, brightness, and rotation limits.

- Methods like `offset_crop` and `random_rotation` adjust the landmark coordinates accordingly.


- Preprocessor:

- Initializes augmentation methods.

- Normalizes data.

- Dataset Class:

- Inherits from `Dataset`.

- Handles image paths, landmark coordinates, and cropping information parsed from XML files.

- Splits data into training and validation sets.

- Uses `DataLoader` for batch processing.

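For the augmentation step described above, the landmark side of cropping and rotation can be sketched with NumPy as follows. This is a minimal sketch, not the project's `FaceLandmarksAugmentation` class: the function names, the `(N, 2)` array layout in pixel coordinates, and the counter-clockwise angle convention (the one used by PIL's `Image.rotate`) are assumptions, and the matching image warp is left out.

```python
import numpy as np


def offset_crop_landmarks(landmarks, left, top):
    """Shift (N, 2) pixel landmarks into the frame of a crop whose
    top-left corner sits at (left, top) in the original image (hypothetical)."""
    # Cropping removes `left` columns and `top` rows, so every point
    # moves by the same offset.
    return landmarks - np.array([left, top], dtype=float)


def rotate_landmarks(landmarks, angle_deg, center):
    """Move (N, 2) landmarks to match an image rotated counter-clockwise
    (as seen on screen) by angle_deg around `center` (hypothetical)."""
    # Image coordinates: x grows to the right, y grows downward, so a
    # counter-clockwise turn on screen uses [[cos, sin], [-sin, cos]].
    theta = np.deg2rad(angle_deg)
    c, s = np.cos(theta), np.sin(theta)
    rot = np.array([[c, s], [-s, c]])
    center = np.asarray(center, dtype=float)
    offsets = landmarks - center
    # Row-wise rot @ v for every offset vector v.
    return offsets @ rot.T + center


def random_rotation_landmarks(landmarks, max_angle, center, rng):
    """Draw an angle in [-max_angle, max_angle] and rotate the landmarks.
    The angle is returned so the same rotation can be applied to the image."""
    angle = rng.uniform(-max_angle, max_angle)
    return rotate_landmarks(landmarks, angle, center), angle
```

Returning the sampled angle keeps the image and its landmarks in sync: the caller rotates the image with the same angle, for example via `Image.rotate(angle)`.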
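The source does not specify how the preprocessor normalizes data. The sketch below shows one common choice, offered purely as an assumption: pixel values are scaled to roughly [-1, 1], and landmark coordinates are expressed relative to the image size and centered on zero. `normalize_sample`, `denormalize_landmarks`, and the `mean=0.5`, `std=0.5` defaults are hypothetical.

```python
import numpy as np


def normalize_sample(image, landmarks, mean=0.5, std=0.5):
    """Hypothetical normalization.

    image: uint8 array of shape (H, W) or (H, W, C).
    landmarks: float array of shape (N, 2) in pixel (x, y) coordinates.
    Returns a float image in roughly [-1, 1] and landmarks in [-0.5, 0.5].
    """
    h, w = image.shape[:2]
    img = (image.astype(np.float32) / 255.0 - mean) / std
    # Divide x by width and y by height, then center on zero.
    lm = landmarks / np.array([w, h], dtype=np.float32) - 0.5
    return img, lm


def denormalize_landmarks(lm, width, height):
    """Map normalized landmarks back to pixel coordinates."""
    return (lm + 0.5) * np.array([width, height], dtype=np.float32)
```

`denormalize_landmarks` is the inverse mapping that would be needed to draw predictions back onto the original image.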
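The XML parsing and train/validation split performed by the dataset class can be sketched with the standard library. This sketch assumes the dlib-style iBUG 300-W annotation layout: `<image file=...>` elements, each containing a `<box top left width height>` with 68 `<part name x y>` children. `parse_landmark_root`, `load_landmark_xml`, and `train_val_split` are hypothetical helpers. In the project, records like these would back a `torch.utils.data.Dataset` subclass whose `__getitem__` loads the image, applies the crop and augmentation, and returns tensors to the `DataLoader`.

```python
import random
import xml.etree.ElementTree as ET


def parse_landmark_root(root):
    """Turn a dlib-style annotation tree into a list of sample records."""
    samples = []
    for image in root.iter("image"):
        box = image.find("box")
        if box is None:  # skip images without a face box
            continue
        crop = {k: int(box.get(k)) for k in ("left", "top", "width", "height")}
        # Sort parts by their numeric name ("00" .. "67") to fix the order.
        parts = sorted(box.findall("part"), key=lambda p: int(p.get("name")))
        landmarks = [(float(p.get("x")), float(p.get("y"))) for p in parts]
        samples.append({"path": image.get("file"), "crop": crop, "landmarks": landmarks})
    return samples


def load_landmark_xml(path):
    """Read an annotation file from disk."""
    return parse_landmark_root(ET.parse(path).getroot())


def train_val_split(samples, val_fraction=0.1, seed=0):
    """Shuffle reproducibly, then hold out `val_fraction` for validation."""
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)
    n_val = int(len(samples) * val_fraction)
    val = [samples[i] for i in indices[:n_val]]
    train = [samples[i] for i in indices[n_val:]]
    return train, val
```

Once the records are wrapped in a `Dataset`, `torch.utils.data.random_split` is an alternative to the manual split.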

Network Design

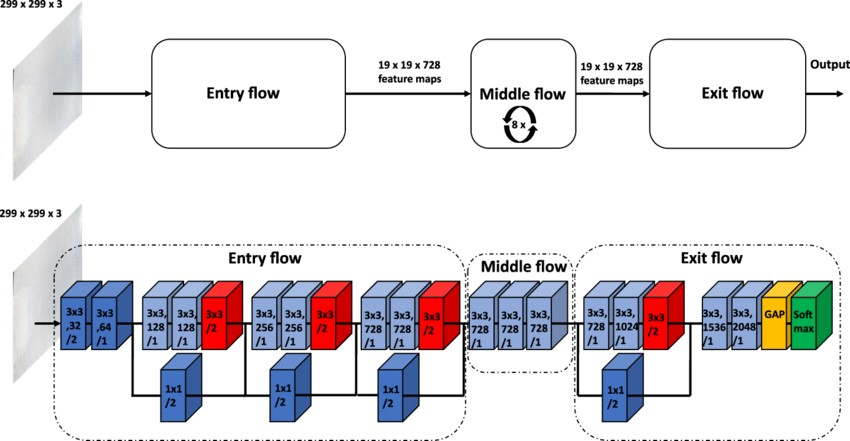

- Architecture:

- Modular CNN with depthwise separable convolutions to improve efficiency.

- Entry Block: Initial feature extraction.

- Middle Blocks: Residual connections.

- Exit Block: Outputs facial landmark coordinates.

- Enhancements:

- Batch normalization and LeakyReLU activations to stabilize training and improve performance.
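The middle blocks can be sketched in PyTorch as Xception-style residual blocks built from depthwise separable convolutions, batch normalization, and LeakyReLU. This is a sketch under assumptions: the entry block is omitted, the channel counts are arbitrary, and the exit head is one plausible design rather than the project's confirmed one. That head uses global average pooling followed by a linear layer that regresses 68 × 2 coordinates. `SeparableConv2d`, `MiddleBlock`, and `LandmarkHead` are hypothetical names.

```python
import torch
from torch import nn


class SeparableConv2d(nn.Module):
    """Depthwise conv (one filter per input channel) followed by a
    pointwise 1x1 conv that mixes channels."""

    def __init__(self, in_ch, out_ch, kernel_size=3):
        super().__init__()
        self.depthwise = nn.Conv2d(in_ch, in_ch, kernel_size,
                                   padding=kernel_size // 2, groups=in_ch, bias=False)
        self.pointwise = nn.Conv2d(in_ch, out_ch, 1, bias=False)

    def forward(self, x):
        return self.pointwise(self.depthwise(x))


class MiddleBlock(nn.Module):
    """Three (LeakyReLU -> SeparableConv -> BatchNorm) stages plus an
    identity residual connection, in the style of Xception's middle flow."""

    def __init__(self, channels, negative_slope=0.01):
        super().__init__()
        layers = []
        for _ in range(3):
            layers += [nn.LeakyReLU(negative_slope),
                       SeparableConv2d(channels, channels),
                       nn.BatchNorm2d(channels)]
        self.body = nn.Sequential(*layers)

    def forward(self, x):
        return x + self.body(x)  # residual connection


class LandmarkHead(nn.Module):
    """Hypothetical exit head: pool features, regress (x, y) per landmark."""

    def __init__(self, channels, num_landmarks=68):
        super().__init__()
        self.num_landmarks = num_landmarks
        self.pool = nn.AdaptiveAvgPool2d(1)
        self.fc = nn.Linear(channels, num_landmarks * 2)

    def forward(self, x):
        x = self.pool(x).flatten(1)
        return self.fc(x).view(-1, self.num_landmarks, 2)
```

The efficiency gain shows up in the weight count. With 128 input and output channels, `SeparableConv2d` has 128 × 9 + 128 × 128 = 17,536 weights, compared with 128 × 128 × 9 = 147,456 for a standard 3 × 3 convolution.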

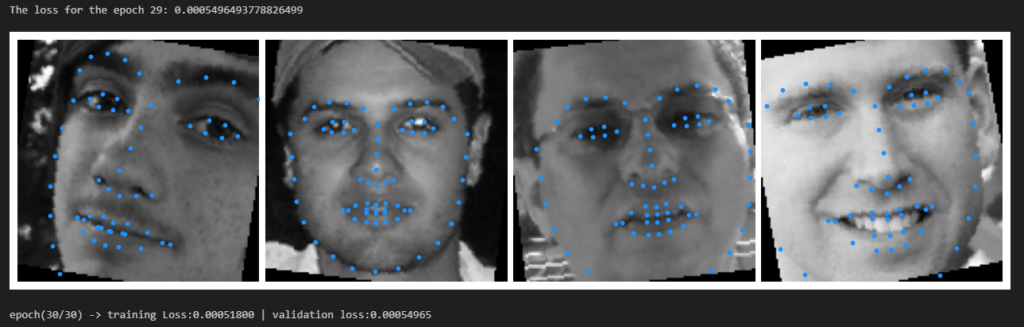

- Training Loop:

- Runs for 30 epochs.

- Computes training loss and performs backpropagation.

- Updates weights using an optimizer.

- Validates model after each epoch.

- Saves checkpoints to retain the model that performs best on the validation set, which limits the effect of overfitting in later epochs.
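The training loop above can be sketched as follows. In this version a checkpoint is written only when validation loss improves. The MSE loss, the Adam optimizer, the learning rate, and the checkpoint path are assumptions; the source states only that an optimizer is used and that the model is validated after each of 30 epochs. `train` is a hypothetical function name.

```python
import torch
from torch import nn


def train(model, train_loader, val_loader, epochs=30, lr=1e-4,
          ckpt_path="best_model.pt", device="cpu"):
    """Hypothetical loop: train, validate every epoch, keep the best weights."""
    model.to(device)
    criterion = nn.MSELoss()  # assumed regression loss on landmark coordinates
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)  # assumed optimizer
    best_val = float("inf")
    history = []

    for epoch in range(epochs):
        model.train()
        train_loss = 0.0
        for images, landmarks in train_loader:
            images, landmarks = images.to(device), landmarks.to(device)
            optimizer.zero_grad()
            loss = criterion(model(images), landmarks)
            loss.backward()   # backpropagation
            optimizer.step()  # weight update
            train_loss += loss.item() * images.size(0)
        train_loss /= len(train_loader.dataset)

        model.eval()
        val_loss = 0.0
        with torch.no_grad():
            for images, landmarks in val_loader:
                images, landmarks = images.to(device), landmarks.to(device)
                val_loss += criterion(model(images), landmarks).item() * images.size(0)
        val_loss /= len(val_loader.dataset)
        history.append((train_loss, val_loss))

        # Overwrite the checkpoint only when validation loss improves.
        if val_loss < best_val:
            best_val = val_loss
            torch.save(model.state_dict(), ckpt_path)

    return history, best_val
```

Because only improving epochs are saved, the file on disk always holds the weights with the lowest validation loss, even if later epochs start to overfit.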

Results